Comparing the best AI Agent Frameworks (and which one you should pick)


Chances are you’re reading this article because you’ve been tossed around by internet hype while searching for the best way to build an AI agent.

Let’s pause for a moment and consider what an agent is, and why we might want a framework to build one.

An agent is defined as a “system or program that is capable of autonomously performing tasks on behalf of a user or another system.” (Source: IBM)

You can think of this as an LLM talking to itself, recursively prompting itself until it achieves its goal. However, this description only captures one aspect of the broader concept. When someone refers to an “agent” in 2025, they usually mean an LLM-powered assistant that has access to tools and memory and can operate autonomously until it reaches its goal.

- A recursively prompting LLM is still limited to the knowledge it was trained on and can only refine responses based on internal logic. So when we refer to an agent in this article, we mean an assistant that has a system prompt (defining what it does) and access to tools.

- What are tools and how are they executed? If the assistant decides it needs a tool to reach its goal, it returns a structured response to your application, and your application then invokes whichever of its own methods that response references.

- Has autonomy & task planning: Instead of responding to a single input like a chat with an LLM, it can break down tasks, iterate, and make decisions independently. This task prioritization and breakdown is a large part of the frameworks we will see.
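To make the tool-execution point concrete, here is a minimal sketch of the application side. It assumes the model replies with a JSON object naming a tool and its arguments. The JSON shape, the `TOOLS` registry and the two toy functions are all hypothetical, not any particular provider’s format.

```python
import json


# Hypothetical tools: plain functions that the application owns.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


def add(a: float, b: float) -> float:
    return a + b


# Registry mapping tool names (as the model would reference them) to functions.
TOOLS = {"get_weather": get_weather, "add": add}


def dispatch_tool_call(model_response: str):
    """Parse a structured model response and invoke the tool it references."""
    call = json.loads(model_response)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# Stand-in for what a model might return when it decides it needs a tool.
fake_response = '{"tool": "add", "arguments": {"a": 2, "b": 3}}'
result = dispatch_tool_call(fake_response)
print(result)
```

The model never runs anything itself. It only names a tool, and your code decides whether and how to call it.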

In theory, building an AI agent from scratch requires considering a few things:

- Defining how tasks are structured and executed.

- Managing state and memory across agent runs.

- Handling concurrency and inter-agent communication.

- Ensuring scalability and efficiency.

A framework simplifies this by providing abstractions, best practices, and integrations with language models and tools.

But it’s important to understand that ultimately this is all built upon LLMs and their APIs. A framework isn’t capable of doing any more than you copy-pasting queries and responses into a chat with an LLM, whether that chat has tools or not.

If you have a very particular agent / workflow in mind, then you certainly can build your agent from scratch without anyone else’s opinions about how it should be built. However, you’re going to end up building something similar to the frameworks below eventually, so let’s dive in.

As we traverse through this comparison, we will uncover terms and abstractions that are shared by many frameworks. Skipping ahead in this comparison may lead to gaps in your understanding, so I would recommend thinking along with me to get the full picture.

PocketFlow

Language: TypeScript

Docs: https://the-pocket-world.github.io/Pocket-Flow-Framework/apps/

Github: https://github.com/The-Pocket-World/Pocket-Flow-Framework?tab=readme-ov-file

What you need to know: PocketFlow is maintained by a single developer, and aims to capture the single abstraction that all of the following frameworks have in common. To quote the documentation: “A Nested Directed Graph that breaks down tasks into multiple (LLM) steps, with branching and recursion for agent-like decision-making.” The entire framework is less than 200 lines of code - which makes it a great place to start exploring why we might want much larger dependencies like LangGraph.

The docs include a diagram that summarizes this core abstraction.

PocketFlow’s core classes are the Node, Flow, and BatchFlow.

- Nodes represent a single unit of work with an LLM and extend the BaseNode class (see below).

- Nodes can be chained together using successors; each such link is known as a transition. The composition of these transitions with a starting node is known as a flow.

- A BatchFlow extends the Flow, orchestrates the execution of multiple flows, and aggregates the results.

Here is an example of how a conditional branching flow might work.

```typescript
// SuccessNode and ErrorNode are assumed to be BaseNode subclasses defined elsewhere.
class ValidationNode extends BaseNode {
  async prep(sharedState: any) {
    return sharedState.data;
  }

  async execCore(data: any) {
    return data.isValid;
  }

  async post(prepResult: any, execResult: any, sharedState: any) {
    return execResult ? "valid" : "invalid";
  }
}

// Create nodes
const validator = new ValidationNode();
const successHandler = new SuccessNode();
const errorHandler = new ErrorNode();

// Set up branching
validator.addSuccessor(successHandler, "valid");
validator.addSuccessor(errorHandler, "invalid");

// Create and run flow
const flow = new Flow(validator);
await flow.run({
  data: { isValid: true }
});
```

This validator node is not very useful by itself; it would be used in a larger flow to compose a practical workflow. However, it demonstrates the concept well. Here are the intended uses of the methods of the BaseNode class:

- prep: Prepares the node for execution by initializing any necessary state or resources.

- execCore: Executes the core logic of the node.

- post: Determines the next action to take based on the execution result.

Having a look at the post() method in the example, you can imagine substituting an LLM call in order to make this decision, and again in execCore.
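To see how little machinery the idea needs, here is a rough Python sketch of the same prep / execCore / post cycle with successors. This is not PocketFlow’s code. The class and method names (`Node`, `Flow`, `exec_core`, `RecordNode`) are my own stand-ins, and a real node would call an LLM where this one just reads a flag.

```python
class Node:
    """One unit of work; subclasses override prep, exec_core and post."""

    def __init__(self):
        self.successors = {}  # action name -> next node

    def add_successor(self, node, action="default"):
        self.successors[action] = node

    def prep(self, shared):
        return None

    def exec_core(self, prep_result):
        return None

    def post(self, prep_result, exec_result, shared):
        return "default"

    def run(self, shared):
        prep_result = self.prep(shared)
        exec_result = self.exec_core(prep_result)
        return self.post(prep_result, exec_result, shared)


class Flow:
    """Starts at one node and follows successors until no action matches."""

    def __init__(self, start):
        self.start = start

    def run(self, shared):
        node = self.start
        while node is not None:
            action = node.run(shared)
            node = node.successors.get(action)
        return shared


class ValidationNode(Node):
    def prep(self, shared):
        return shared["data"]

    def exec_core(self, data):
        return data["is_valid"]

    def post(self, prep_result, exec_result, shared):
        return "valid" if exec_result else "invalid"


class RecordNode(Node):
    """Toy terminal node that records which branch was taken."""

    def __init__(self, label):
        super().__init__()
        self.label = label

    def post(self, prep_result, exec_result, shared):
        shared["log"].append(self.label)
        return "done"  # no successor is registered for "done", so the flow stops


validator = ValidationNode()
validator.add_successor(RecordNode("success"), "valid")
validator.add_successor(RecordNode("error"), "invalid")

state = Flow(validator).run({"data": {"is_valid": True}, "log": []})
print(state["log"])
```

The string returned by `post` is the only thing that picks the next node, which is why swapping in an LLM call there turns a fixed pipeline into agent-like branching.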

One critique of this approach is that it is a simple state machine. The system can be in one or more states at a given time, and transition (with successors) to subsequent states. This can become quite complex as the number of transitions you have to account for increases. In many cases, it might make more sense to define a data structure (e.g. CodingTask or BlogPostWritingTask) and then deal with the flow of data and concurrency yourself.

Still, with this sub-200-line framework we can create simple agents, multi-agent workflows and RAG (Retrieval Augmented Generation) agents. Now it’s high time we consider something more fleshed out.

CrewAI

Language: Python

Docs: https://www.crewai.com/

Github: https://github.com/crewaiinc/crewai?tab=readme-ov-file

What you need to know: CrewAI is an open-source AI Agent framework that is composed of:

- Tasks - Tasks are assigned to agents for them to complete.

- Agents - Agents essentially “have a conversation with themselves” and have tools and roles assigned to them. A role is a text description of the agent’s expertise.

- Crews - A crew contains any number of agents.

- Process - This is how the crew allocates and prioritizes tasks. You can, for example, determine whether a task can be executed in parallel or independently.
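The four pieces above fit together roughly like this. The sketch uses plain dataclasses, not CrewAI’s actual classes or signatures. `work` is a hypothetical stand-in for an LLM call, and the process shown is a simple sequential one where each task’s output becomes context for the next.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    role: str  # text description of the agent's expertise
    # (task description, context) -> output; stand-in for an LLM call
    work: Callable[[str, str], str]


@dataclass
class Task:
    description: str
    agent: Agent  # the agent this task is assigned to


@dataclass
class Crew:
    agents: List[Agent]
    tasks: List[Task]

    def kickoff(self) -> List[str]:
        """Sequential process: run tasks in order, passing each output on as context."""
        outputs = []
        context = ""
        for task in self.tasks:
            context = task.agent.work(task.description, context)
            outputs.append(context)
        return outputs


researcher = Agent("Researcher", lambda description, context: f"notes on {description}")
writer = Agent("Writer", lambda description, context: f"{description} using [{context}]")

crew = Crew(
    agents=[researcher, writer],
    tasks=[
        Task("agent frameworks", researcher),
        Task("draft a summary", writer),
    ],
)
results = crew.kickoff()
print(results[-1])
```

Swapping `kickoff` for a different loop (a manager agent handing out tasks, say) is what changing the process amounts to in this toy version.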

What’s great about CrewAI is that everything can be declared in YAML. Your tasks, agents and crews can be written and exported in YAML - which is convenient and versatile.

CrewAI also integrates directly with LangChain’s suite of tools, which will be a big plus for many. It also has built-in support for monitoring with OpenLit, which is open-source and can be self-hosted. Monitoring provides insight into LLM costs, exceptions, sequences of tasks, latency etc. Thus, with a monitoring setup like OpenLit, you can track your agents and crews over time, and adjust hyperparameters (such as temperature) to fine-tune your agents.

You can also replace a given agent in your crew with, for example, a LangGraph agent or your own custom code. So the framework excels at high-level orchestration (through YAML/the simple UI) while still allowing lower-level customization.

The framework supports Human-in-the-Loop, which is where agents are able to request additional information or clarification when necessary during execution of a process. However, it does not support asking for human input during execution of a task, only at the end of a task, before returning the final result to the user.

CrewAI offers Time Travel (known as replay in CrewAI), which means you can re-run certain tasks from the latest kickoff (the latest run of the crew).

Lastly, memory is something you will see accounted for in all of these frameworks. CrewAI defines 4 types of memory:

- Short-Term Memory - Stores recent interactions and outcomes using RAG and is used by agents during their current execution.

- Contextual Memory - This memory type is also used during current execution of an agent, but it is made up of the short-term, long-term and entity memory.

- Entity Memory - Stores information about entities used during tasks.

- Long-Term Memory - Stores information from past executions.

So memory allows agents to gain greater contextual awareness, learn from past actions, and understand entities and the relationships between them.
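As a rough mental model of how these memory types relate, here is a small sketch. It is not how CrewAI implements memory. The `Memory` class is hypothetical, and naive keyword overlap stands in for the RAG-style retrieval a real system would use.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Memory:
    short_term: List[str] = field(default_factory=list)  # current run only
    long_term: List[str] = field(default_factory=list)   # survives across runs
    entities: Dict[str, str] = field(default_factory=dict)  # facts keyed by entity name

    def contextual(self, query: str) -> List[str]:
        """Combine short-term, long-term and entity memory into context for a query."""
        words = set(query.lower().split())
        # Keyword overlap is a toy replacement for embedding-based retrieval.
        hits = [
            note
            for note in self.short_term + self.long_term
            if words & set(note.lower().split())
        ]
        hits += [
            f"{name}: {fact}"
            for name, fact in self.entities.items()
            if name.lower() in words
        ]
        return hits

    def end_run(self) -> None:
        """Promote this run's notes to long-term memory and clear short-term."""
        self.long_term.extend(self.short_term)
        self.short_term.clear()


memory = Memory()
memory.short_term.append("user prefers python")
memory.entities["CrewAI"] = "task-based framework"

context = memory.contextual("which python framework is crewai")
print(context)

memory.end_run()  # the note now lives in long-term memory
```

The point is only the shape: contextual memory is a view assembled from the other three stores at the moment an agent needs it.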

Back to CrewAI…

The crux of this framework is that you need to be able to decompose your workflows into tasks. If what you want to accomplish cannot be expressed in the form of a task, this is not for you. For instance, if your goal is to make a crew for personalized healthcare management, monitoring health data from various sources and adapting treatment plans for a patient, this is not going to be optimal. Another example would be an autonomous vehicle agent. However, it’s safe to say most workflows can be decomposed into tasks.

CrewAI has an enterprise subscription plan where you get access to the UI studio amongst other things. The UI studio has an excellent GUI for visualizing and creating crews, as well as exporting a crew as standalone code, along with exporting as YAML.

However, there is an open-source project that has many of the same features as the enterprise UI studio, which could be self-hosted.

LangGraph

Language: Python, JavaScript

Docs: https://www.langchain.com/langgraph

Github:

What you need to know: LangGraph is possibly the most generic framework (besides PocketFlow), in that it doesn’t define crews or flows or anything of the sort.

As you may have guessed, LangGraph facilitates building agents whose control flow is represented as a graph.

LangGraph and LangChain are considered the most widely known and documented frameworks in this space, so integrations with their tools (like CrewAI has above) are considered a pro. LangChain facilitates building applications with LLMs and chaining calls together, whereas LangGraph is about representing the workflow itself as a graph. LangGraph extends LangChain’s core to enable cyclic, graph-based workflows. Agents are nodes with customizable edges for conditional transitions, loops, and parallel processing.

Imagine building a chatbot that answers questions about a specific domain (e.g., medical information). You could use LangGraph to lay out the workflow as a graph: one node retrieves relevant medical data, another prompts the language model with it, and a conditional edge loops back for more retrieval if the draft answer is not good enough. LangChain components can then be used inside those nodes to manage prompts and model calls, so the responses are accurate and contextually appropriate.

Ultimately, you can build anything that you need for your workflow: router graphs, ReAct agent graphs (loop between reasoning and tool execution), and reflection agent graphs (self-critique outputs and iterate). You can view a nice article on these architectures here: https://medium.com/towards-data-science/navigating-the-new-types-of-llm-agents-and-architectures-309382ce9f88.
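The ReAct pattern mentioned above is easier to picture as a loop. Here is a minimal sketch in plain Python rather than LangGraph’s API. `scripted_model` is a hypothetical stand-in for an LLM that first asks for a tool and then answers from the observation, and `calculator` is a toy tool.

```python
def calculator(expression: str) -> str:
    """Toy tool: only handles 'a + b'."""
    left, right = expression.split("+")
    return str(int(left) + int(right))


TOOLS = {"calculator": calculator}


def scripted_model(history: list) -> dict:
    """Stand-in for an LLM: request a tool first, then answer from its result."""
    observations = [item for item in history if item["role"] == "tool"]
    if not observations:
        return {"action": "calculator", "input": "19 + 23"}
    return {"action": "finish", "input": f"The answer is {observations[-1]['content']}"}


def react_loop(question: str, model, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = model(history)  # reason: decide on the next action
        if step["action"] == "finish":
            return step["input"]
        observation = TOOLS[step["action"]](step["input"])  # act: run the tool
        history.append({"role": "tool", "content": observation})  # observe
    raise RuntimeError("No answer within max_steps")


answer = react_loop("What is 19 + 23?", scripted_model)
print(answer)
```

In graph terms, the model call and the tool call are two nodes, and the `finish` check is the conditional edge that either loops back or exits.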

The learning curve is definitely the steepest of all of the frameworks explored here, as you need to decide what architecture you want to use to build an agent. State management is complex, and many consider it to require significant boilerplate code for basic workflows. However, this is also its strength: for complicated workflows, LangGraph is considered the best. It supports highly customizable human-in-the-loop, time travel, streaming & memory.

Autogen

Language: Python, C#

What you need to know: Autogen is based on the actor model - which facilitates messaging between actors in a system. In the actor model, the actor can literally be anything, and is the core unit of execution - when work is being done it is being done by an actor. In fact, a state machine can also be encapsulated in the actor paradigm, as each state in the machine can be encapsulated as an actor that behaves according to that state. All that an actor cares about is how to deal with messages that arrive, and how to send messages to other actors.

In Autogen, an agent is an actor, and an agent can send messages to other agents (and use tools like an assistant). Autogen facilitates the setup of conversations between agents in different patterns / hierarchies that will keep going until a termination condition is met (the task is considered completed according to some standard).
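If the actor model is new to you, this toy version may help. It is a sketch of the pattern only and does not use Autogen. The `System`, `Writer` and `Critic` classes are hypothetical, and the canned replies stand in for LLM calls.

```python
from collections import deque


class System:
    """Delivers messages between actors, one at a time, from a shared mailbox."""

    def __init__(self):
        self.actors = {}
        self.mailbox = deque()
        self.log = []

    def send(self, sender, recipient, message):
        self.mailbox.append((sender, recipient, message))

    def run(self, is_done, max_messages=100):
        for _ in range(max_messages):
            if not self.mailbox:
                break
            sender, recipient, message = self.mailbox.popleft()
            self.log.append((sender, recipient, message))
            if is_done(message):  # termination condition
                break
            self.actors[recipient].receive(sender, message)
        return self.log


class Actor:
    """An actor only knows how to react to a message and send new ones."""

    def __init__(self, name, system):
        self.name = name
        self.system = system
        system.actors[name] = self

    def receive(self, sender, message):
        raise NotImplementedError


class Writer(Actor):
    def __init__(self, name, system):
        super().__init__(name, system)
        self.version = 0

    def receive(self, sender, message):
        self.version += 1  # every request or critique produces a new draft
        self.system.send(self.name, "critic", f"draft v{self.version}")


class Critic(Actor):
    def receive(self, sender, message):
        reply = "APPROVED" if message == "draft v2" else "revise"
        self.system.send(self.name, sender, reply)


system = System()
Writer("writer", system)
Critic("critic", system)
system.send("user", "writer", "write a haiku")
log = system.run(is_done=lambda message: message == "APPROVED")
print(len(log), log[-1])
```

Nothing here defines a process or a task order. The conversation simply continues until the termination condition fires, which is the trade-off discussed further down.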

The framework also makes it easy for agents to generate and run code in Docker containers. Their docs have a great example of an agent executing Python code generated by another agent.

Like CrewAI, Autogen can only ask for human input after completing a task, before it returns the result to the user (human-in-the-loop). There’s no support for time travel, but it does have a companion repository, Autogen Studio, which allows you to quickly prototype agents and workflows, as well as test them in a playground. No other framework has open-source first-party support for an interface like this.

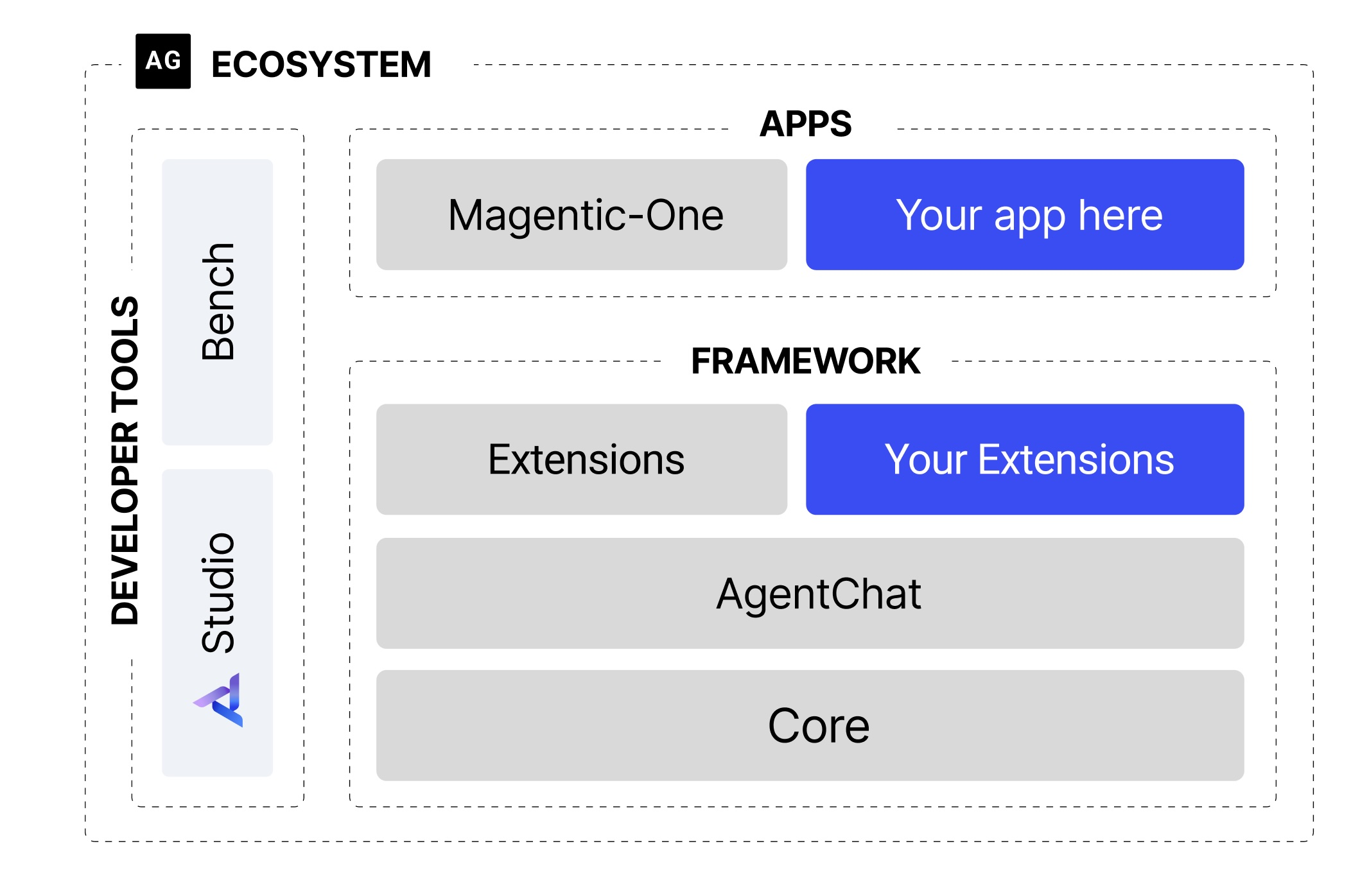

In fact, here is the full ecosystem:

- Core - Agent communication etc.

- AgentChat - Built on top of Core, and provides patterns for pre-defined agents, teams and more.

- Extensions - Enables various LLM support and code execution. This is where you can integrate RAG, vector DBs, LangChain etc.

The app listed above, Magentic-One, “is a generalist multi-agent system for solving open-ended web and file-based tasks across a variety of domains.” Magentic-One comes pre-installed with AgentChat, which is a feature none of the other frameworks offer.

There is another open-source project, FastAgency, that has the goal of being a “production-ready” (whatever that means in 2025) API that allows you to deploy your Autogen workflow to either a chat interface or REST API with minimal code. This sounds great, but it is a separate project rather than something Autogen supports natively. This is one of the reasons Mastra (below) piqued my interest.

While Autogen excels at creating conversational agents capable of working together, it lacks an inherent concept of process. In Autogen, orchestrating agents' interactions requires additional programming, which can become complex and cumbersome as the scale of tasks grows.

However, teams (groups of agents) can be instantiated through RoundRobinGroupChat and SelectorGroupChat, which are still very powerful (both are worth reading up on in the Autogen docs).

Lastly, this should be obvious, but Autogen is a Microsoft product, which means it has good integrations with other Microsoft services and products, and might be preferable for enterprises requiring auditability - or those that are simply engulfed in the Microsoft suite.

Mastra

Language: TypeScript

What you need to know: Mastra sparked my interest as a TS agent framework, similar to LangGraph in its approach, but one where you can get started in a few minutes by running:

```bash
npx create-mastra@latest
```

After that, you can start the Mastra dev server, and you’ll have an API and a UI with the ability to customize your agents, workflows and tools, refine prompts with AI, and view traces (tool calls that were made, text generated, etc.).

Mastra is great if you’re using React or Next.js and want to get something up and running fast. However, the framework is built upon the Vercel AI SDK (which I, and many others, avoid like the plague).

PydanticAI

Language: Python

What you need to know: PydanticAI is built by the team behind Pydantic, a validation library used by all of the Python frameworks listed above and more.

It’s built upon ReAct agents, which as mentioned above, loop between reasoning and tool execution. These have a:

- system prompt

- tools

- dependencies

- LLM

- Structured Output

Here is an example of defining an agent and a tool:

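```python
from pydantic_ai import Agent, RunContext

# The agent takes an int dependency (the winning square) and returns a bool.
roulette_agent = Agent(
    'openai:gpt-4o',
    deps_type=int,
    result_type=bool,
    system_prompt=(
        'Use the `roulette_wheel` function to see if the '
        'customer has won based on the number they provide.'
    ),
)


# Register a tool the agent can call; ctx.deps carries the winning square.
@roulette_agent.tool
async def roulette_wheel(ctx: RunContext[int], square: int) -> str:
    """check if the square is a winner"""
    return 'winner' if square == ctx.deps else 'loser'
```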

While PydanticAI does support various multi-agent setups, you’ll probably want to steer clear of the graph-based approach, since it might well be easier to do something similar using code (i.e. programmatic agent hand-off):

“There are roughly four levels of complexity when building applications with PydanticAI:

Here are the docs relating to graphs (and why they’re like a nail-gun, other multi-agent workflows are a sledgehammer, and agents are a hammer).

Multi-agent delegation implies that cycles are not supported. So a tool that is used by one agent (Agent Alice) can reference another agent (Agent Smith), and Agent Smith can then pass its response back to Agent Alice after completion. But you can’t have a situation where Agent Smith directly calls Agent Alice (via a tool) and then Agent Alice calls Agent Smith again in a cycle. To implement that you would need to use graph-based control flow or programmatic hand-off.
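The Alice and Smith arrangement can be sketched without any framework. This is not PydanticAI’s API. `SimpleAgent` is a hypothetical class, and the handlers are canned functions standing in for model calls.

```python
class SimpleAgent:
    """Hypothetical stand-in for an agent: a handler plus named tools it may call."""

    def __init__(self, name, handler):
        self.name = name
        self.handler = handler
        self.tools = {}

    def tool(self, fn):
        """Decorator that registers a function as one of this agent's tools."""
        self.tools[fn.__name__] = fn
        return fn

    def run(self, prompt):
        return self.handler(self, prompt)


# Agent Smith has no tools, so he has no way to call back into Alice.
smith = SimpleAgent("smith", lambda agent, prompt: f"joke about {prompt}")


def alice_handler(agent, prompt):
    # Alice decides (here: always) to delegate through her tool, then wraps the result.
    joke = agent.tools["ask_smith"](prompt)
    return f"Alice picked: {joke}"


alice = SimpleAgent("alice", alice_handler)


@alice.tool
def ask_smith(topic):
    # Delegation: control goes Alice -> Smith -> back to Alice, never Smith -> Alice.
    return smith.run(topic)


reply = alice.run("graphs")
print(reply)
```

Because Smith only returns a value, the call stack always unwinds back to Alice, which is the "no cycles" property described above.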

PydanticAI doesn’t have support for any form of memory, or human-in-the-loop at this stage.

It’s clear that most of the ecosystem for data manipulation, machine learning, statistical analysis and now AI agent frameworks lives in Python. If you were on the search (like me) for a TypeScript framework that is as fully featured as any of the Python frameworks, you’re mostly out of luck.

Hopefully the above analysis gives you enough insight and understanding so you can choose what framework would best suit your use-cases (or not - straight Python is certainly doable).

Personally, my workflows align very well with the task-based approach that CrewAI offers, and their sequential/hierarchical organization of agents. I don’t need so much flexibility and extensibility, so I see LangGraph resulting in lots of boilerplate code. CrewAI’s ability to be defined in YAML (for basic workflows) is already highly appealing to me.

Autogen’s easy learning curve, dev-tools and apps (Magentic-One) made it land a close second in my opinion, and for my needs.

However, in this age of exponential development, you must realize that it might take merely 3 weeks after this article is published for all of this to be outdated. The important thing is that you pick one, and don’t start researching the plethora of other frameworks like Agno and LlamaIndex (which are also great!). Unless you know you have very specific use-cases, any one of the above frameworks will certainly be more than enough for whatever it is you are trying to build.
